Computing in a Post-Fact World - Who Is An Authority?

Who is an "authority" in computing? If specifications and textbooks disagree, who can adjudicate?

Those of us who are getting longer in the tooth will remember the Key Stage 3 National Strategy. It was designed to help "non-specialists" teach ICT and was controversial in a number of ways - it didn't cover the National Curriculum, for example, but it also included things that weren't in the National Curriculum, and despite being "non-statutory" it became compulsory in a number of schools.

It gave us Pat's Poor Presentation and ultimately paved the way for portfolio-based qualifications and the demise of ICT as a subject, but it wasn't entirely the devil's work - I did use a couple of the presentations, including one on "authority":

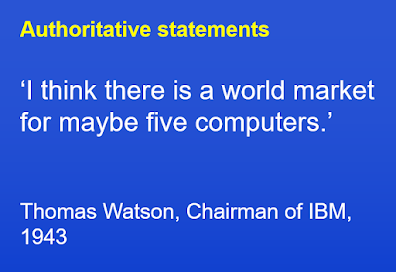

The idea was that the origin of a statement would tell us how trustworthy it was - we could trust an "authority" on a particular subject.

But who is an authority in Computing?

When I was a student, and up until the end of the last century, our research was done in libraries. If someone had their thoughts or work published in a book then it appeared that they were an expert in their subject.

All that's changed in the internet age. We switched to doing our research on-line, and at first I had the same thought - that anyone whose name appeared on a website must be an authority - but as we now know, when it comes to the internet, it's often the case that he who shouts loudest is the first to be heard.

By way of illustration, I don't consider myself to be an "authority" on Computing (except in a very local sense), but a quick search for my name revealed the following citations:

In a paper from Trident University International, I learnt of my discovery, in 2016, that computers use binary.

In Gary Beauchamp's book "Computing and ICT in the Primary School: From pedagogy to practice", I found that I'd provided a definition of Computing.

I discovered that suggested reading for the Computer Science course at New York University includes a link to a blog that I wrote for Computing teachers in England.

Finally, I also found links to my teaching resources in schemes of work for Somerset and the state of Victoria (in Australia), as well as links from various individual schools. While I hope that people find those things helpful, it has made me think more carefully about the nature of authority.

I don't want to name names, but you can probably think of your own examples of people who appear to have become authorities in particular areas despite having no formal training in them.

So why does this matter? Well, this blog was prompted by a question on social media about how to represent a negative number in normalised floating-point binary. If you've taught A level and tried to look for examples, you'll be aware that there isn't agreement on how to do it, which is why my website includes two different methods.

My old Computer Science textbook from the 80s, the Cambridge International A level textbook and most of the examples that you can find on the web normalise the positive number first and then find the two's complement. The OCR resources, and a small number of examples found elsewhere, find the two's complement first and then normalise. A lot of the time you get the same answer, but not always: one case where they differ is when the size of the number is a power of two, such as -0.5 or -4.

To me, the former method makes more sense; otherwise you're normalising based on the two's complement representation of the number rather than the size of the number itself (and it's also the method I encountered first!). I'd also never heard of the rule that says the first two bits of the mantissa need to be different.
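To make the difference between the two methods concrete, here is a minimal Python sketch. It assumes an 8-bit two's complement mantissa with the binary point after the sign bit, and it leaves the exponent as a plain integer rather than encoding it in binary. The function names are made up for illustration, neither function checks whether the exponent would fit in the bits available, and both assume the number is non-zero and exactly representable.

```python
from fractions import Fraction

MANTISSA_BITS = 8  # assumed mantissa width, including the sign bit


def mantissa_bits(m: Fraction) -> str:
    """Two's complement pattern for -1 <= m < 1, binary point after the sign bit."""
    scaled = m * 2 ** (MANTISSA_BITS - 1)
    if scaled.denominator != 1:
        raise ValueError("value needs more mantissa bits")
    pattern = int(scaled) % 2 ** MANTISSA_BITS  # wrap negatives into two's complement
    bits = format(pattern, f"0{MANTISSA_BITS}b")
    return bits[0] + "." + bits[1:]


def normalise_then_negate(x: Fraction) -> tuple[str, int]:
    """Method 1: normalise the size of x into [1/2, 1), then take the two's complement."""
    if x == 0:
        raise ValueError("zero cannot be normalised")
    m, e = abs(x), 0
    while m >= 1:
        m, e = m / 2, e + 1
    while m < Fraction(1, 2):
        m, e = m * 2, e - 1
    return mantissa_bits(-m if x < 0 else m), e


def negate_then_normalise(x: Fraction) -> tuple[str, int]:
    """Method 2: start from x in two's complement, then shift until the
    first two bits of the mantissa differ."""
    if x == 0:
        raise ValueError("zero cannot be normalised")
    m, e = x, 0
    while not -1 <= m < 1:                        # too big for the mantissa: shift right
        m, e = m / 2, e + 1
    while Fraction(-1, 2) <= m < Fraction(1, 2):  # first two bits equal (00 or 11): shift left
        m, e = m * 2, e - 1
    return mantissa_bits(m), e


for x in (Fraction(-3), Fraction(-1, 2)):
    print(x, normalise_then_negate(x), negate_then_normalise(x))
# -3 ('1.0100000', 2) ('1.0100000', 2)
# -1/2 ('1.1000000', 0) ('1.0000000', -1)
```

For -3 both methods agree. For -1/2 the first method gives a mantissa starting 11, which fails the "first two bits differ" rule, while the second shifts once more to get 1.0000000 with an exponent of -1. Both bit patterns represent -0.5.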

So which is right? Who is the authority on techniques in Computer Science? To whom can we refer for adjudication? Does anyone even know who invented normalised binary so that we can go back to the original source?*

If only there was an authority for standards that could provide us with definitions! Something like the International Electrotechnical Commission (IEC). They publish standards for electrical and electronic technology, ranging from the shape of plugs to the graphical symbols used on equipment. But what happens when an authority changes its mind?

My first computer, a ZX-81, had one kilobyte of RAM. I knew that it was 1024 bytes of memory. When I started my O level Computer Studies course in 1983 a kilobyte was 1024 bytes. By the time I graduated in 1990, the kilobyte still contained 1024 bytes, as it did throughout my time in the software industry for most of the 90s. An "authority" during this time would have correctly asserted that a kilobyte was 1024 bytes, and presumably it's still perfectly possible to find documents from this time.

Then, in December 1998, the IEC decided that a kilobyte should be 1000 bytes, to make it consistent with kilograms and kilometres, and introduced the new name "kibibyte" (KiB) for 1024 bytes. Firstly, according to Wikipedia, that's only a "recommendation". Secondly, how was that change communicated?

Like most people, I don't get up in the morning and feel the need to check that water still boils at 100°C or that light hasn't slowed down overnight, so why would I think to check whether the definition of the kilobyte had changed? It's something I'd known for nearly twenty years.

In fact, even as an ICT/Computing teacher and someone who is more-than-averagely interested in technology, that news didn't reach me for about 15 years, during which time I'd been confidently telling everyone that there are 1024 bytes in a kilobyte.

To confuse matters further, in 2006 there was a court case involving hard-disc manufacturer Western Digital. A disgruntled customer was unhappy that his new hard disc only contained 1000 bytes per kilobyte instead of the 1024 he was expecting, and claimed that he'd been short-changed as a result. Despite it being eight years since the IEC changed the definition, Western Digital settled the case in the customer's favour; his argument was that most people still think that there are 1024 bytes in a kilobyte.

So who's the authority? The IEC or the courts?

Luckily for us, some exam boards allow both definitions, but more than twenty years after the change both are still widely used. Windows File Explorer uses 1024, but the file manager in Ubuntu uses 1000; the packaging for my new SanDisk SD card says that it uses 1000, but when I put the 32GB card in my new Canon camera it says that it's 29GB.
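The gap between 32 and 29 is just the two definitions at work. The Python sketch below assumes that the card holds exactly 32,000,000,000 bytes (real cards have slightly less usable space once formatted, and the camera's own rounding rule isn't known); the helper name `gigabytes` is hypothetical.

```python
def gigabytes(n_bytes: int, bytes_per_kilobyte: int) -> float:
    """Size in 'gigabytes' when a kilobyte is bytes_per_kilobyte bytes."""
    # kilo -> mega -> giga: three steps of the chosen multiplier
    return n_bytes / bytes_per_kilobyte ** 3


card = 32_000_000_000  # assumed: exactly 32 decimal gigabytes, as on the packaging

print(f"{gigabytes(card, 1000):.2f}")  # 32.00 with 1000 bytes per kilobyte
print(f"{gigabytes(card, 1024):.2f}")  # 29.80 with 1024 bytes per kilobyte
```

Same bytes, different divisor: a device that counts in 1024s and drops the fractional part would show 29.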

Sometimes I wonder whether living in a post-fact world would be easier.

* By strange coincidence, whilst writing this article I searched for normalised binary in Bing, and what should appear at the top of the search results?

Maybe I'm more of an authority than I thought!

November 2020 Update

Since I wrote this article, only about six weeks ago, I've become aware of further issues:

- The TES has now deleted all of the "Subject Genius" articles from its website. This includes some of those cited in the examples above, which means that we've reached a stage where authoritative statements can disappear within five years, in a way that books and papers don't.

- Some exam boards have updated their specifications for 2020, now stating that ASCII is an eight-bit code and removing references to "extended ASCII". Quite apart from being factually questionable (the ASCII standard itself defines a seven-bit code), it means that students' understanding of this computing "fact" will depend on which GCSE they took and in which year.
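For what it's worth, Python's built-in "ascii" codec follows the seven-bit definition: it accepts code points 0 to 127 and rejects anything higher. The sketch below uses it as a convenient stand-in for the standard; the helper name `ascii_bits` is made up for illustration.

```python
def ascii_bits(text: str) -> list[str]:
    """Encode text as ASCII and show each code as a 7-bit binary string."""
    # str.encode("ascii") raises UnicodeEncodeError for code points above 127
    return [format(code, "07b") for code in text.encode("ascii")]


print(ascii_bits("Hi"))      # ['1001000', '1101001']
print(ascii_bits(chr(127)))  # ['1111111'], the highest ASCII code

try:
    ascii_bits("café")
except UnicodeEncodeError as err:
    print(err.reason)        # ordinal not in range(128)
```

Codes 128 to 255 only appear in the various eight-bit extensions (ISO 8859-1, for example) that are often loosely called "extended ASCII".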

This blog was originally written in September 2020.